Building on Nesa Protocol

Nesa is for any application or service that wants to use AI. You can integrate your product with Nesa in less than a minute and gain access to over 3,000 AI models.

Open-Sora is an initiative dedicated to efficiently producing high-quality video. By embracing open-source principles, Open-Sora provides access to advanced video generation techniques while offering a streamlined and user-friendly platform that simplifies the complexities of video generation.

Open-Sora-Plan aims to create a simple and scalable repository that reproduces Sora (by OpenAI, which the project prefers to call "ClosedAI"). The current code supports complete training and inference on the Huawei Ascend computing system and can output video quality comparable to the highest industry standards.

Nesa's AI Link™ is the protocol that enables the AIT to interoperate with different blockchain networks, supporting cross-chain transfer of models, data, parameters, and computational tasks.

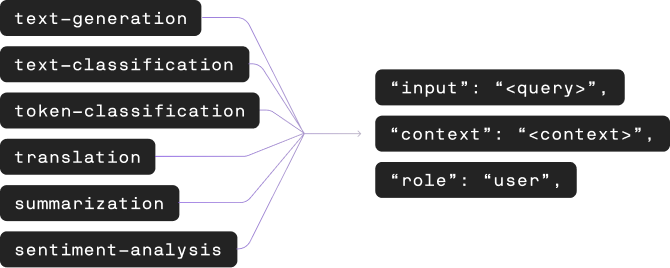

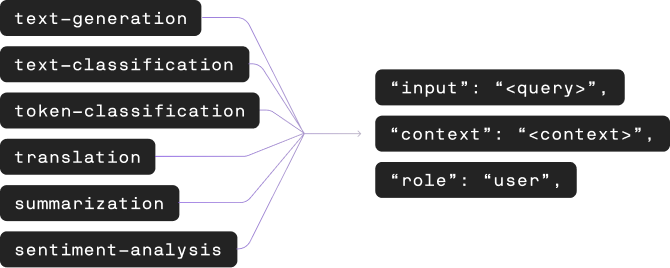

A minimal code interface for developers who want factory-default inference query settings and a turnkey deployment setup, suited to small models or to models that evolve slowly.
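The idea of "factory-default" query settings can be illustrated with a small configuration object: the developer names a model and accepts every default, overriding a field only when needed. This is a minimal sketch, assuming the defaults are a fixed set of sampling parameters; the class `InferenceQuery`, its field names, and its default values are hypothetical and are not Nesa's actual interface.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class InferenceQuery:
    """Hypothetical inference query settings (names and defaults are illustrative only)."""
    model_id: str
    max_new_tokens: int = 256
    temperature: float = 0.7
    top_p: float = 0.9


# Minimal path: name the model and accept every factory default.
default_query = InferenceQuery(model_id="my-small-model")

# Overriding one setting returns a new object; the other fields keep their defaults
# and the original query is left unchanged (the dataclass is frozen).
creative_query = replace(default_query, temperature=1.0)
```

Making the settings immutable means a shared default object cannot be modified by accident; each customization produces its own copy.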

A richly customizable AIVM Execution process that prescribes the steps every node must follow, including the initialization procedure, data input conventions, model execution, and output handling.
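The four stages named above (initialization, data input, model execution, output handling) can be pictured as a fixed pipeline that every node runs in the same order. This is a minimal sketch, assuming each stage can be expressed as a plain function; `ExecutionSpec`, `execute`, and the toy "model" are hypothetical illustrations, not the AIVM's real interface.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class ExecutionSpec:
    """Hypothetical description of the steps every node runs, in a fixed order."""
    initialize: Callable[[], Dict[str, Any]]         # e.g. load weights, set seeds
    prepare_input: Callable[[Any], Any]              # apply the data input convention
    run_model: Callable[[Dict[str, Any], Any], Any]  # execute the model on prepared input
    handle_output: Callable[[Any], Any]              # post-process / serialize the result


def execute(spec: ExecutionSpec, raw_input: Any) -> Any:
    """Run the four stages strictly in order and return the handled output."""
    state = spec.initialize()
    model_input = spec.prepare_input(raw_input)
    raw_output = spec.run_model(state, model_input)
    return spec.handle_output(raw_output)


# Usage with a toy "model" that scales comma-separated integers.
spec = ExecutionSpec(
    initialize=lambda: {"scale": 2},
    prepare_input=lambda text: [int(tok) for tok in text.split(",")],
    run_model=lambda state, xs: [x * state["scale"] for x in xs],
    handle_output=lambda ys: ",".join(str(y) for y in ys),
)
result = execute(spec, "1,2,3")  # "2,4,6"
```

Pinning the stage order in one shared spec is one way to make results from independent nodes comparable: given the same spec and the same input, each node performs identical steps.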

Start building on Nesa by uploading your model container and bringing a node online.