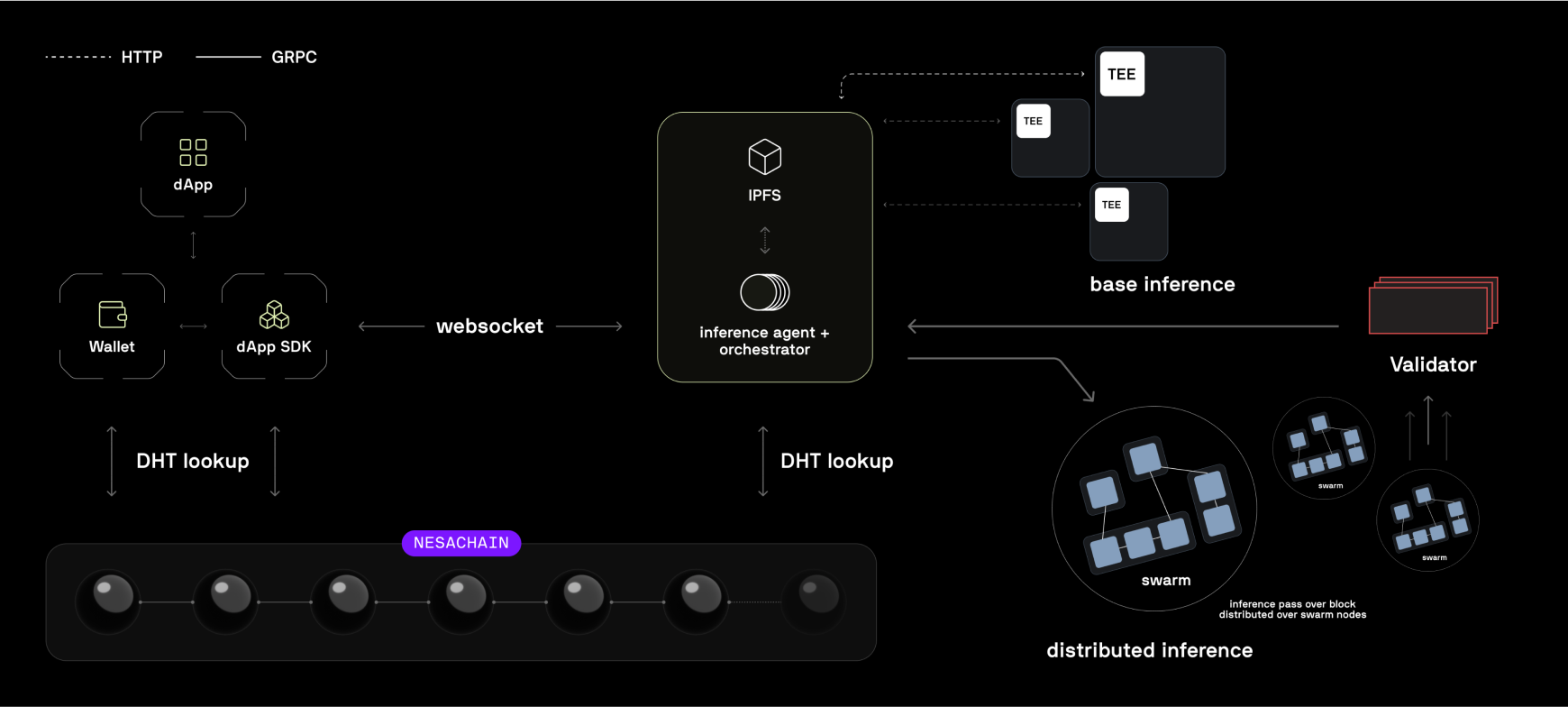

Trusted Execution

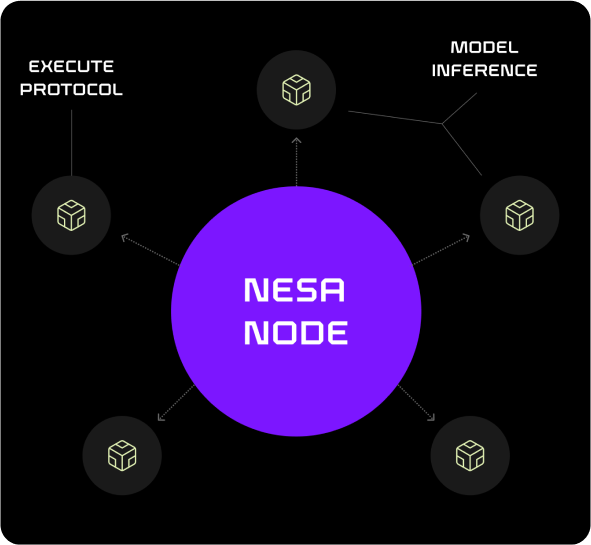

Specialized nodes in the network, equipped with remotely attested trusted execution environments (TEEs), handle the distribution of secret shares.

Leading Privacy

Secure multi-party computation (SMPC) for privacy-preserving computation, with zero-knowledge proof (ZKP) schemes for verifiability.
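To make the SMPC idea concrete, here is a minimal sketch of additive secret sharing, one common SMPC building block. It illustrates the technique under assumptions and is not this network's protocol: the field modulus `PRIME`, the helper names `share`, `reconstruct` and `add_shared`, and the three-party setup are all hypothetical, and no ZKP layer is shown. Each party holds one share; adding shares locally produces shares of the sum without any party seeing the inputs.

```python
import secrets

# Hypothetical field modulus chosen for illustration (the Mersenne prime 2^61 - 1).
PRIME = 2**61 - 1

def share(secret: int, n_parties: int) -> list[int]:
    """Split `secret` into n additive shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    last = (secret - sum(shares)) % PRIME
    return shares + [last]

def reconstruct(shares: list[int]) -> int:
    """Recombine all shares; any subset smaller than n reveals nothing."""
    return sum(shares) % PRIME

def add_shared(a: list[int], b: list[int]) -> list[int]:
    """Each party adds its own two shares locally, yielding shares of a + b."""
    return [(x + y) % PRIME for x, y in zip(a, b)]

a_shares = share(20, 3)
b_shares = share(22, 3)
print(reconstruct(add_shared(a_shares, b_shares)))  # 42
```

Addition comes for free in this scheme; multiplying two shared values needs an extra protocol (for example, Beaver multiplication triples), which is where practical SMPC frameworks spend most of their effort.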

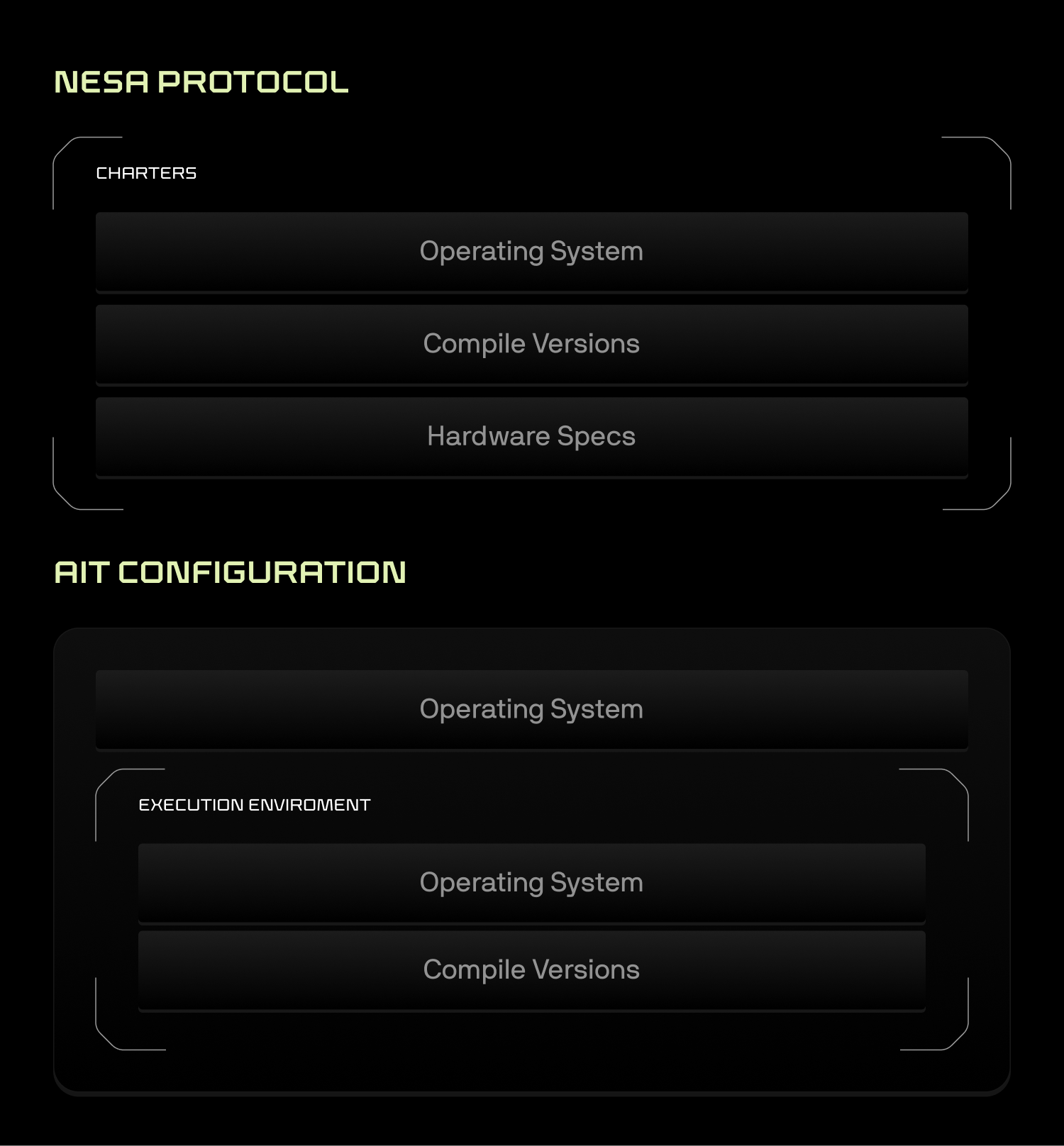

Environment Standardization

A standardized and secure execution environment for running AI inference on containerized models.

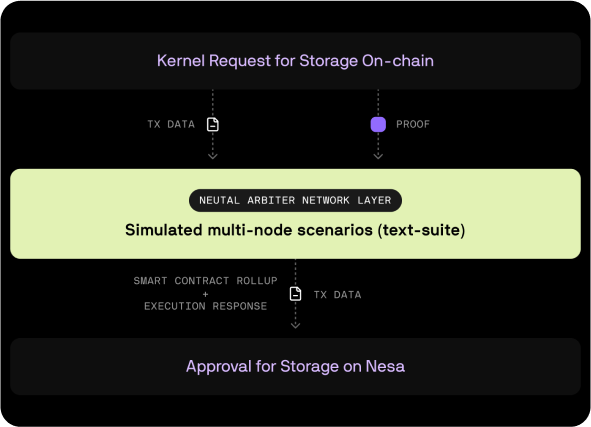

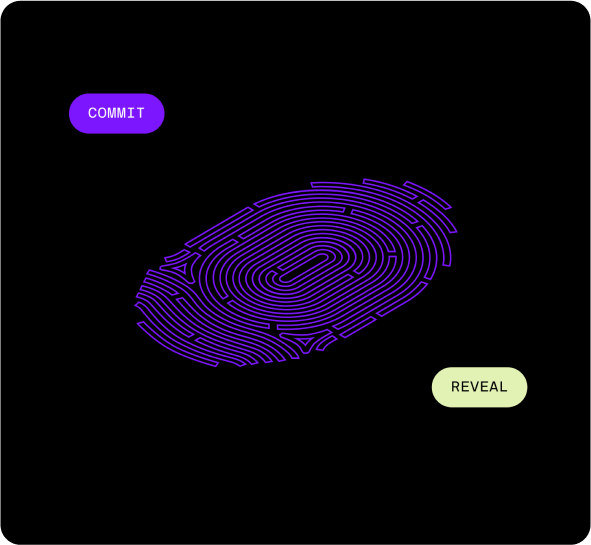

Two-Phase Transactions

Inference request queueing for high-throughput, low-latency execution.

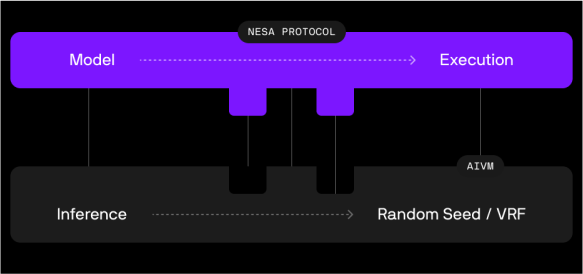

Robust Inference Committee Selection

A fair and secure method for selecting inference committees, using a verifiable random function (VRF) and an organizational structure of duties.
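A minimal sketch of VRF-style committee selection, under stated assumptions. Python's standard library has no VRF, so the sketch substitutes HMAC-SHA256 as a keyed pseudo-random function; unlike a real VRF (such as ECVRF, specified in RFC 9381), its output cannot be checked by other nodes without the key. The function names, the per-request seed and the "lowest scores win" rule are hypothetical, the simulation holds every node's key in one dictionary (in practice each node would compute its own output and publish it with a proof), and the organizational structure of duties is not modeled.

```python
import hashlib
import hmac

def vrf_stand_in(secret_key: bytes, seed: bytes) -> int:
    """Keyed pseudo-random score for a node and seed.

    NOTE: HMAC-SHA256 is a stand-in, NOT a VRF: the output carries no
    publicly verifiable proof.
    """
    digest = hmac.new(secret_key, seed, hashlib.sha256).digest()
    return int.from_bytes(digest, "big")

def select_committee(node_keys: dict[str, bytes], seed: bytes, size: int) -> list[str]:
    """Rank nodes by their score for this seed and keep the `size` lowest."""
    ranked = sorted(node_keys, key=lambda node: vrf_stand_in(node_keys[node], seed))
    return ranked[:size]

# Hypothetical usage: ten nodes with placeholder keys, one committee per request seed.
nodes = {f"node-{i}": f"key-{i}".encode() for i in range(10)}
committee = select_committee(nodes, seed=b"request-123", size=3)
print(committee)
```

Because the score depends only on each node's key and the shared seed, the same seed always yields the same committee, while a new seed reshuffles membership unpredictably for anyone who does not hold the keys.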

Custom Aggregation

Flexibility in how inference results are aggregated into the final output.
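One way to offer flexible aggregation is a pluggable strategy registry. The sketch below is hypothetical: the `AGGREGATORS` registry, the `aggregate` function and the two strategies (majority vote for discrete outputs, median for numeric ones) are illustrative choices, not the network's documented options.

```python
from collections import Counter
from statistics import median
from typing import Callable, Sequence

# Hypothetical signature for an aggregation strategy: committee outputs in, final output out.
Aggregator = Callable[[Sequence], object]

def majority_vote(outputs: Sequence[str]) -> str:
    """Return the most common output; ties go to the first one encountered."""
    return Counter(outputs).most_common(1)[0][0]

def median_value(outputs: Sequence[float]) -> float:
    """Return the median, which tolerates a minority of outlying numeric results."""
    return median(outputs)

# Hypothetical registry mapping strategy names to functions.
AGGREGATORS: dict[str, Aggregator] = {
    "majority": majority_vote,
    "median": median_value,
}

def aggregate(outputs: Sequence, strategy: str = "majority"):
    """Apply the named aggregation strategy to a committee's outputs."""
    try:
        fn = AGGREGATORS[strategy]
    except KeyError:
        raise ValueError(f"unknown aggregation strategy: {strategy!r}") from None
    return fn(outputs)

print(aggregate(["cat", "dog", "cat"]))         # cat
print(aggregate([0.91, 0.88, 0.95], "median"))  # 0.91
```

Keeping strategies in a lookup table means a new aggregation rule is one function plus one registry entry, with no change to the callers.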